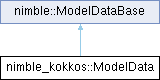

#include <nimble_kokkos_model_data.h>

Public Member Functions | |

| ModelData () | |

| ~ModelData () override | |

| int | AllocateNodeData (nimble::Length length, std::string label, int num_objects) override |

| Allocate data storage for a node-based quantity. | |

| int | GetFieldId (const std::string &field_label) const override |

| Returns the field ID for a specific label. | |

| void | InitializeBlocks (nimble::DataManager &data_manager, const std::shared_ptr< nimble::MaterialFactoryBase > &material_factory_base) override |

| Initialize the different blocks in the mesh. | |

| void | UpdateStates (const nimble::DataManager &data_manager) override |

| Copy time state (n+1) into time state (n). | |

| nimble::Viewify< 1 > | GetScalarNodeData (int field_id) override |

| Get view of scalar quantity defined on nodes. | |

| nimble::Viewify< 2 > | GetVectorNodeData (int field_id) override |

| Get view of vector quantity defined on nodes. | |

| void | ComputeLumpedMass (nimble::DataManager &data_manager) override |

| Compute the lumped mass. | |

| void | InitializeExodusOutput (nimble::DataManager &data_manager) override |

| void | WriteExodusOutput (nimble::DataManager &data_manager, double time_current) override |

| Write output of simulation in Exodus format. | |

| void | ComputeInternalForce (nimble::DataManager &data_manager, double time_previous, double time_current, bool is_output_step, const nimble::Viewify< 2 > &displacement, nimble::Viewify< 2 > &force) override |

| Compute the internal force. | |

| void | UpdateWithNewVelocity (nimble::DataManager &data_manager, double dt) override |

| Update model with new velocity. | |

| void | UpdateWithNewDisplacement (nimble::DataManager &data_manager, double dt) override |

| Update model with new displacement. | |

| int | AllocateElementData (int block_id, nimble::Length length, std::string label, int num_objects) |

| int | AllocateIntegrationPointData (int block_id, nimble::Length length, std::string label, int num_objects, std::vector< double > initial_value=std::vector< double >()) |

| std::vector< int > | GetBlockIds () const |

| std::vector< std::string > | GetScalarNodeDataLabels () const |

| std::vector< std::string > | GetVectorNodeDataLabels () const |

| std::vector< std::string > | GetSymmetricTensorIntegrationPointDataLabels (int block_id) const |

| std::vector< std::string > | GetFullTensorIntegrationPointDataLabels (int block_id) const |

| HostScalarNodeView | GetHostScalarNodeData (int field_id) |

| HostVectorNodeView | GetHostVectorNodeData (int field_id) |

| HostSymTensorIntPtView | GetHostSymTensorIntegrationPointData (int block_id, int field_id, nimble::Step step) |

| HostFullTensorIntPtView | GetHostFullTensorIntegrationPointData (int block_id, int field_id, nimble::Step step) |

| HostScalarElemView | GetHostScalarElementData (int block_id, int field_id) |

| HostSymTensorElemView | GetHostSymTensorElementData (int block_id, int field_id) |

| HostFullTensorElemView | GetHostFullTensorElementData (int block_id, int field_id) |

| DeviceScalarNodeView | GetDeviceScalarNodeData (int field_id) |

| DeviceVectorNodeView | GetDeviceVectorNodeData (int field_id) |

| DeviceSymTensorIntPtView | GetDeviceSymTensorIntegrationPointData (int block_id, int field_id, nimble::Step step) |

| DeviceFullTensorIntPtView | GetDeviceFullTensorIntegrationPointData (int block_id, int field_id, nimble::Step step) |

| DeviceScalarIntPtView | GetDeviceScalarIntegrationPointData (int block_id, int field_id, nimble::Step step) |

| DeviceVectorIntPtView | GetDeviceVectorIntegrationPointData (int block_id, int field_id, nimble::Step step) |

| DeviceScalarElemView | GetDeviceScalarElementData (int block_id, int field_id) |

| DeviceSymTensorElemView | GetDeviceSymTensorElementData (int block_id, int field_id) |

| DeviceFullTensorElemView | GetDeviceFullTensorElementData (int block_id, int field_id) |

| DeviceScalarNodeGatheredView | GatherScalarNodeData (int field_id, int num_elements, int num_nodes_per_element, const DeviceElementConnectivityView &elem_conn_d, DeviceScalarNodeGatheredView gathered_view_d) |

| DeviceVectorNodeGatheredView | GatherVectorNodeData (int field_id, int num_elements, int num_nodes_per_element, const DeviceElementConnectivityView &elem_conn_d, DeviceVectorNodeGatheredView gathered_view_d) |

| void | ScatterScalarNodeData (int field_id, int num_elements, int num_nodes_per_element, const DeviceElementConnectivityView &elem_conn_d, const DeviceScalarNodeGatheredView &gathered_view_d) |

| void | ScatterVectorNodeData (int field_id, int num_elements, int num_nodes_per_element, const DeviceElementConnectivityView &elem_conn_d, const DeviceVectorNodeGatheredView &gathered_view_d) |

| void | ScatterScalarNodeDataUsingKokkosScatterView (int field_id, int num_elements, int num_nodes_per_element, const DeviceElementConnectivityView &elem_conn_d, const DeviceScalarNodeGatheredView &gathered_view_d) |

Public Member Functions inherited from nimble::ModelDataBase | |

| ModelDataBase ()=default | |

| Constructor. | |

| virtual | ~ModelDataBase ()=default |

| Destructor. | |

| int | GetFieldIdChecked (const std::string &field_label) const |

| virtual void | InitializeBlocks (nimble::DataManager &data_manager, const std::shared_ptr< nimble::MaterialFactoryBase > &material_factory_base)=0 |

| Initialize the different blocks in the mesh. | |

| nimble::Viewify< 1 > | GetScalarNodeData (const std::string &label) |

| Get view of scalar quantity defined on nodes. | |

| nimble::Viewify< 2 > | GetVectorNodeData (const std::string &label) |

| Get view of vector quantity defined on nodes. | |

| virtual void | ComputeExternalForce (nimble::DataManager &data_manager, double time_previous, double time_current, bool is_output_step) |

| Compute the external force. | |

| virtual void | ApplyInitialConditions (nimble::DataManager &data_manager) |

| Apply initial conditions. | |

| virtual void | ApplyKinematicConditions (nimble::DataManager &data_manager, double time_current, double time_previous) |

| Apply kinematic conditions. | |

| int | GetDimension () const |

| Get the spatial dimension. | |

| void | SetCriticalTimeStep (double time_step) |

| Set the critical time step. | |

| void | SetDimension (int dim) |

| Set spatial dimension. | |

| void | SetReferenceCoordinates (const nimble::GenesisMesh &mesh) |

| Set reference coordinates. | |

| double | GetCriticalTimeStep () const |

| Get the critical time step. | |

| const std::vector< std::string > & | GetNodeDataLabelsForOutput () const |

| const std::map< int, std::vector< std::string > > & | GetElementDataLabels () const |

| const std::map< int, std::vector< std::string > > & | GetElementDataLabelsForOutput () const |

| const std::map< int, std::vector< std::string > > & | GetDerivedElementDataLabelsForOutput () const |

Protected Types | |

| using | Data = std::unique_ptr<FieldBase> |

Protected Member Functions | |

| void | InitializeGatheredVectors (const nimble::GenesisMesh &mesh_) |

| void | InitializeBlockData (nimble::DataManager &data_manager) |

| Initialize block data for material information. | |

| template<FieldType ft> | |

| Field< ft >::View | GetDeviceElementData (int block_id, int field_id) |

| template<FieldType ft> | |

| Field< ft >::View | GetDeviceIntPointData (int block_id, int field_id, nimble::Step step) |

Member Typedef Documentation

◆ Data

| using nimble_kokkos::ModelData::Data = std::unique_ptr<FieldBase> | protected |

Constructor & Destructor Documentation

◆ ModelData()

| nimble_kokkos::ModelData::ModelData | ( | ) |

◆ ~ModelData()

| nimble_kokkos::ModelData::~ModelData () | override |

Member Function Documentation

◆ AllocateElementData()

| int nimble_kokkos::ModelData::AllocateElementData | ( | int | block_id, |

| nimble::Length | length, | ||

| std::string | label, | ||

| int | num_objects ) |

◆ AllocateIntegrationPointData()

| int nimble_kokkos::ModelData::AllocateIntegrationPointData | ( | int | block_id, |

| nimble::Length | length, | ||

| std::string | label, | ||

| int | num_objects, | ||

| std::vector< double > | initial_value = std::vector<double>() ) |

◆ AllocateNodeData()

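| int nimble_kokkos::ModelData::AllocateNodeData (nimble::Length length, std::string label, int num_objects) | override virtual |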

Allocate data storage for a node-based quantity.

- Parameters

  - length
  - label
  - num_objects

- Returns

- Field ID for the data allocated

Implements nimble::ModelDataBase.

◆ ComputeInternalForce()

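| void nimble_kokkos::ModelData::ComputeInternalForce (nimble::DataManager &data_manager, double time_previous, double time_current, bool is_output_step, const nimble::Viewify< 2 > &displacement, nimble::Viewify< 2 > &force) | override virtual |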

Compute the internal force.

- Parameters

  - [in] data_manager
  - [in] time_previous
  - [in] time_current
  - [in] is_output_step
  - [in] displacement
  - [out] internal_force: Output for internal force (this argument is named force in the member function summary)

Reimplemented from nimble::ModelDataBase.

◆ ComputeLumpedMass()

| void nimble_kokkos::ModelData::ComputeLumpedMass (nimble::DataManager &data_manager) | override virtual |

Compute the lumped mass.

- Parameters

  - data_manager: Reference to the data manager

Implements nimble::ModelDataBase.

◆ GatherScalarNodeData()

| DeviceScalarNodeGatheredView nimble_kokkos::ModelData::GatherScalarNodeData | ( | int | field_id, |

| int | num_elements, | ||

| int | num_nodes_per_element, | ||

| const DeviceElementConnectivityView & | elem_conn_d, | ||

| DeviceScalarNodeGatheredView | gathered_view_d ) |

◆ GatherVectorNodeData()

| DeviceVectorNodeGatheredView nimble_kokkos::ModelData::GatherVectorNodeData | ( | int | field_id, |

| int | num_elements, | ||

| int | num_nodes_per_element, | ||

| const DeviceElementConnectivityView & | elem_conn_d, | ||

| DeviceVectorNodeGatheredView | gathered_view_d ) |

◆ GetBlockIds()

| std::vector< int > nimble_kokkos::ModelData::GetBlockIds | ( | ) | const |

◆ GetDeviceElementData()

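| template<FieldType ft> Field< ft >::View nimble_kokkos::ModelData::GetDeviceElementData (int block_id, int field_id) | protected |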

◆ GetDeviceFullTensorElementData()

| DeviceFullTensorElemView nimble_kokkos::ModelData::GetDeviceFullTensorElementData | ( | int | block_id, |

| int | field_id ) |

◆ GetDeviceFullTensorIntegrationPointData()

| DeviceFullTensorIntPtView nimble_kokkos::ModelData::GetDeviceFullTensorIntegrationPointData | ( | int | block_id, |

| int | field_id, | ||

| nimble::Step | step ) |

◆ GetDeviceIntPointData()

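| template<FieldType ft> Field< ft >::View nimble_kokkos::ModelData::GetDeviceIntPointData (int block_id, int field_id, nimble::Step step) | protected |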

◆ GetDeviceScalarElementData()

| DeviceScalarElemView nimble_kokkos::ModelData::GetDeviceScalarElementData | ( | int | block_id, |

| int | field_id ) |

◆ GetDeviceScalarIntegrationPointData()

| DeviceScalarIntPtView nimble_kokkos::ModelData::GetDeviceScalarIntegrationPointData | ( | int | block_id, |

| int | field_id, | ||

| nimble::Step | step ) |

◆ GetDeviceScalarNodeData()

| DeviceScalarNodeView nimble_kokkos::ModelData::GetDeviceScalarNodeData | ( | int | field_id | ) |

◆ GetDeviceSymTensorElementData()

| DeviceSymTensorElemView nimble_kokkos::ModelData::GetDeviceSymTensorElementData | ( | int | block_id, |

| int | field_id ) |

◆ GetDeviceSymTensorIntegrationPointData()

| DeviceSymTensorIntPtView nimble_kokkos::ModelData::GetDeviceSymTensorIntegrationPointData | ( | int | block_id, |

| int | field_id, | ||

| nimble::Step | step ) |

◆ GetDeviceVectorIntegrationPointData()

| DeviceVectorIntPtView nimble_kokkos::ModelData::GetDeviceVectorIntegrationPointData | ( | int | block_id, |

| int | field_id, | ||

| nimble::Step | step ) |

◆ GetDeviceVectorNodeData()

| DeviceVectorNodeView nimble_kokkos::ModelData::GetDeviceVectorNodeData | ( | int | field_id | ) |

◆ GetFieldId()

| int nimble_kokkos::ModelData::GetFieldId (const std::string &field_label) const | inline override virtual |

Returns the field ID for a specific label.

- Parameters

  - field_label: Label for a stored quantity

- Returns

- Field ID to identify the data storage

Implements nimble::ModelDataBase.
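The relationship between a label and its field ID can be pictured with a small stand-alone sketch. This is not the NimbleSM implementation: the class name FieldRegistry, the method Allocate, and the convention of returning -1 for an unknown label are assumptions made only for illustration. The page itself states only that allocation returns a field ID, that GetFieldId maps a label to that ID, and that a member named field_label_to_field_id_map_ exists.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for label-to-ID bookkeeping (not NimbleSM code).
class FieldRegistry
{
 public:
  // Register a label and return its ID; a label that already exists keeps its ID.
  int Allocate(const std::string& label)
  {
    auto it = label_to_id_.find(label);
    if (it != label_to_id_.end()) return it->second;
    const int id = static_cast<int>(label_to_id_.size());
    label_to_id_.emplace(label, id);
    return id;
  }

  // Return the ID stored for a label.
  // Assumption: -1 signals an unknown label; the real class may behave differently.
  int GetFieldId(const std::string& label) const
  {
    auto it = label_to_id_.find(label);
    return it == label_to_id_.end() ? -1 : it->second;
  }

 private:
  std::map<std::string, int> label_to_id_;
};
```

In this sketch the ID returned at allocation time is the same value a later lookup by label yields, which is the property callers of GetFieldId rely on.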

◆ GetFullTensorIntegrationPointDataLabels()

| std::vector< std::string > nimble_kokkos::ModelData::GetFullTensorIntegrationPointDataLabels | ( | int | block_id | ) | const |

◆ GetHostFullTensorElementData()

| HostFullTensorElemView nimble_kokkos::ModelData::GetHostFullTensorElementData | ( | int | block_id, |

| int | field_id ) |

◆ GetHostFullTensorIntegrationPointData()

| HostFullTensorIntPtView nimble_kokkos::ModelData::GetHostFullTensorIntegrationPointData | ( | int | block_id, |

| int | field_id, | ||

| nimble::Step | step ) |

◆ GetHostScalarElementData()

| HostScalarElemView nimble_kokkos::ModelData::GetHostScalarElementData | ( | int | block_id, |

| int | field_id ) |

◆ GetHostScalarNodeData()

| HostScalarNodeView nimble_kokkos::ModelData::GetHostScalarNodeData | ( | int | field_id | ) |

◆ GetHostSymTensorElementData()

| HostSymTensorElemView nimble_kokkos::ModelData::GetHostSymTensorElementData | ( | int | block_id, |

| int | field_id ) |

◆ GetHostSymTensorIntegrationPointData()

| HostSymTensorIntPtView nimble_kokkos::ModelData::GetHostSymTensorIntegrationPointData | ( | int | block_id, |

| int | field_id, | ||

| nimble::Step | step ) |

◆ GetHostVectorNodeData()

| HostVectorNodeView nimble_kokkos::ModelData::GetHostVectorNodeData | ( | int | field_id | ) |

◆ GetScalarNodeData()

| nimble::Viewify< 1 > nimble_kokkos::ModelData::GetScalarNodeData (int field_id) | override virtual |

Get view of scalar quantity defined on nodes.

- Parameters

  - field_id: The field ID (see DataManager::GetFieldIDs())

- Returns

- Viewify<1> object for scalar quantity

Implements nimble::ModelDataBase.

◆ GetScalarNodeDataLabels()

| std::vector< std::string > nimble_kokkos::ModelData::GetScalarNodeDataLabels | ( | ) | const |

◆ GetSymmetricTensorIntegrationPointDataLabels()

| std::vector< std::string > nimble_kokkos::ModelData::GetSymmetricTensorIntegrationPointDataLabels | ( | int | block_id | ) | const |

◆ GetVectorNodeData()

| nimble::Viewify< 2 > nimble_kokkos::ModelData::GetVectorNodeData (int field_id) | override virtual |

Get view of vector quantity defined on nodes.

- Parameters

  - field_id: The field ID (see DataManager::GetFieldIDs())

- Returns

- Viewify<2> object for vector quantity

Implements nimble::ModelDataBase.

◆ GetVectorNodeDataLabels()

| std::vector< std::string > nimble_kokkos::ModelData::GetVectorNodeDataLabels | ( | ) | const |

◆ InitializeBlockData()

| void nimble_kokkos::ModelData::InitializeBlockData (nimble::DataManager &data_manager) | protected |

Initialize block data for material information.

- Parameters

  - data_manager

◆ InitializeBlocks()

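| void nimble_kokkos::ModelData::InitializeBlocks (nimble::DataManager &data_manager, const std::shared_ptr< nimble::MaterialFactoryBase > &material_factory_base) | override |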

Initialize the different blocks in the mesh.

- Parameters

  - data_manager: Reference to the data manager
  - material_factory_base: Shared pointer to the material factory

◆ InitializeExodusOutput()

| void nimble_kokkos::ModelData::InitializeExodusOutput (nimble::DataManager &data_manager) | override virtual |

Reimplemented from nimble::ModelDataBase.

◆ InitializeGatheredVectors()

| void nimble_kokkos::ModelData::InitializeGatheredVectors (const nimble::GenesisMesh &mesh_) | protected |

◆ ScatterScalarNodeData()

| void nimble_kokkos::ModelData::ScatterScalarNodeData | ( | int | field_id, |

| int | num_elements, | ||

| int | num_nodes_per_element, | ||

| const DeviceElementConnectivityView & | elem_conn_d, | ||

| const DeviceScalarNodeGatheredView & | gathered_view_d ) |

◆ ScatterScalarNodeDataUsingKokkosScatterView()

| void nimble_kokkos::ModelData::ScatterScalarNodeDataUsingKokkosScatterView | ( | int | field_id, |

| int | num_elements, | ||

| int | num_nodes_per_element, | ||

| const DeviceElementConnectivityView & | elem_conn_d, | ||

| const DeviceScalarNodeGatheredView & | gathered_view_d ) |

◆ ScatterVectorNodeData()

| void nimble_kokkos::ModelData::ScatterVectorNodeData | ( | int | field_id, |

| int | num_elements, | ||

| int | num_nodes_per_element, | ||

| const DeviceElementConnectivityView & | elem_conn_d, | ||

| const DeviceVectorNodeGatheredView & | gathered_view_d ) |
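The Gather and Scatter members above carry no description on this page. The sketch below shows the usual finite element meaning of the two operations, using plain std::vector in place of Kokkos views. The function names GatherNodeData and ScatterNodeData, the flat connectivity layout, and the accumulate-on-scatter behavior are assumptions for illustration, not the signatures or semantics of the NimbleSM routines.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Gather: copy one scalar per node into per-element storage.
// conn holds num_elements * num_nodes_per_element node indices, element by element.
std::vector<double> GatherNodeData(const std::vector<double>& node_data,
                                   const std::vector<int>& conn)
{
  std::vector<double> gathered(conn.size());
  for (std::size_t i = 0; i < conn.size(); ++i) {
    gathered[i] = node_data.at(static_cast<std::size_t>(conn[i]));
  }
  return gathered;
}

// Scatter: add each per-element contribution back onto its node.
// Assumption: the caller zeroes node_data beforehand when a fresh sum is wanted.
void ScatterNodeData(const std::vector<double>& gathered,
                     const std::vector<int>& conn,
                     std::vector<double>& node_data)
{
  assert(gathered.size() == conn.size());
  for (std::size_t i = 0; i < conn.size(); ++i) {
    node_data.at(static_cast<std::size_t>(conn[i])) += gathered[i];
  }
}
```

A node shared by several elements receives the sum of their contributions. In a parallel device kernel that accumulation needs atomic updates or a reduction helper, which is presumably why a separate variant based on Kokkos ScatterView is listed.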

◆ UpdateStates()

| void nimble_kokkos::ModelData::UpdateStates (const nimble::DataManager &data_manager) | override virtual |

Copy time state (n+1) into time state (n).

- Parameters

  - data_manager: Reference to the data manager

Implements nimble::ModelDataBase.
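As a rough illustration of the state copy, the sketch below keeps two copies of a field, one per time state, and overwrites state n with state n+1 at the end of a step. The struct TwoStateField and the free function UpdateStates are hypothetical; the real class stores integration point data in separate step_n and step_np1 containers on both host and device, and how it performs the copy is not shown on this page.

```cpp
#include <cassert>
#include <vector>

// Hypothetical container holding one field at two time states.
struct TwoStateField
{
  std::vector<double> step_n;    // converged values at time n
  std::vector<double> step_np1;  // values computed for time n+1
};

// Copy time state (n+1) into time state (n); state n+1 is left unchanged.
void UpdateStates(TwoStateField& field)
{
  field.step_n = field.step_np1;
}
```

After the call, the next time step starts from the values that were just computed.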

◆ UpdateWithNewDisplacement()

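| void nimble_kokkos::ModelData::UpdateWithNewDisplacement (nimble::DataManager &data_manager, double dt) | override virtual |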

Update model with new displacement.

- Parameters

  - [in] data_manager: Reference to the data manager
  - [in] dt: Current time step

- Note

- This routine will synchronize the host and device displacements.

Reimplemented from nimble::ModelDataBase.

◆ UpdateWithNewVelocity()

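| void nimble_kokkos::ModelData::UpdateWithNewVelocity (nimble::DataManager &data_manager, double dt) | override virtual |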

Update model with new velocity.

- Parameters

  - [in] data_manager: Reference to the data manager
  - [in] dt: Current time step

- Note

- This routine will synchronize the host and device velocities.

Reimplemented from nimble::ModelDataBase.

◆ WriteExodusOutput()

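| void nimble_kokkos::ModelData::WriteExodusOutput (nimble::DataManager &data_manager, double time_current) | override virtual |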

Write output of simulation in Exodus format.

- Parameters

  - [in] data_manager: Reference to data manager
  - [in] time_current: Time value

Reimplemented from nimble::ModelDataBase.

Member Data Documentation

◆ block_data_

|

protected |

◆ block_id_to_element_data_index_

|

protected |

◆ block_id_to_integration_point_data_index_

|

protected |

◆ blocks_

|

protected |

Blocks.

◆ device_element_data_

|

protected |

◆ device_integration_point_data_step_n_

|

protected |

◆ device_integration_point_data_step_np1_

|

protected |

◆ device_node_data_

|

protected |

◆ displacement_d_

|

protected |

◆ displacement_h_

|

protected |

◆ exodus_output_manager_

|

protected |

◆ field_id_to_device_element_data_index_

|

protected |

◆ field_id_to_device_integration_point_data_index_

|

protected |

◆ field_id_to_device_node_data_index_

|

protected |

◆ field_id_to_host_element_data_index_

|

protected |

◆ field_id_to_host_integration_point_data_index_

|

protected |

◆ field_id_to_host_node_data_index_

|

protected |

◆ field_label_to_field_id_map_

|

protected |

◆ gathered_contact_force_d

|

protected |

◆ gathered_displacement_d

|

protected |

◆ gathered_internal_force_d

|

protected |

◆ gathered_reference_coordinate_d

|

protected |

◆ host_element_data_

|

protected |

◆ host_integration_point_data_step_n_

|

protected |

◆ host_integration_point_data_step_np1_

|

protected |

◆ host_node_data_

|

protected |

◆ velocity_d_

|

protected |

◆ velocity_h_

|

protected |

